Publications

2022

X. Xu, L. Wang, A. Pérard-Gayot, R. Membarth, C. Li, C. Yang, P. Slusallek

Temporal Coherence-based Distributed Ray Tracing of Massive Scenes

Publication Type: Article

IEEE Transactions on Visualization and Computer Graphics (TVCG), 30(2): 1489-1501, 2022

@article{xiang2022distrt,

author = {Xu, Xiang and Wang, Lu and Pérard-Gayot, Arsène and Membarth, Richard and Li, Cuiyu and Yang, Chenglei and Slusallek, Philipp},

title = {Temporal Coherence-Based Distributed Ray Tracing of Massive Scenes},

journal = {IEEE Transactions on Visualization and Computer Graphics (TVCG)},

pages = {1489--1501},

volume = {30},

number = {2},

year = 2022,

month = nov,

date = {2022-11-07},

doi = {10.1109/TVCG.2022.3219982},

publisher = {IEEE}

}

2021

P. Amiri, A. Pérard-Gayot, R. Membarth, P. Slusallek, R. Leißa, S. Hack

FLOWER: A Comprehensive Dataflow Compiler for High-Level Synthesis

Publication Type: Conference

Proceedings of the 2021 International Conference on Field-Programmable Technology (FPT), pp. 1-9, Auckland, New Zealand, December 6-10, 2021

@inproceedings{amiri2021flower,

author = {Amiri, Puya and Pérard-Gayot, Arsène and Membarth, Richard and Slusallek, Philipp and Leißa, Roland and Hack, Sebastian},

address = {Auckland, New Zealand},

booktitle = {Proceedings of the 2021 International Conference on Field-Programmable Technology (FPT)},

title = {{FLOWER}: A Comprehensive Dataflow Compiler for High-Level Synthesis},

pages = {1--9},

%year = 2021,

%month = dec,

date = {2021-12-06/2021-12-10},

doi = {10.1109/ICFPT52863.2021.9609930},

organization = {IEEE}

}

R. Ravedutti Lucio Machado, J. Schmitt, S. Eibl, J. Eitzinger, R. Leißa, S. Hack, A. Pérard-Gayot, R. Membarth, H. Köstler

tinyMD: Mapping Molecular Dynamics Simulations to Heterogeneous Hardware using Partial Evaluation

Publication Type: Article

Journal of Computational Science (JOCS), 54(101425): 1-11, 2021

@article{ravedutti2021tinymd,

author = {Ravedutti Lucio Machado, Rafael and Schmitt, Jonas and Eibl, Sebastian and Eitzinger, Jan and Leißa, Roland and Hack, Sebastian and Pérard-Gayot, Arsène and Membarth, Richard and Köstler, Harald},

title = {{tinyMD}: Mapping Molecular Dynamics Simulations to Heterogeneous Hardware using Partial Evaluation},

journal = {Journal of Computational Science (JOCS)},

pages = {1--11},

volume = {54},

number = {101425},

year = 2021,

month = jul,

date = {2021-07-10},

doi = {10.1016/j.jocs.2021.101425},

publisher = {Elsevier}

}

2020

M. A. Özkan, A. Pérard-Gayot, R. Membarth, P. Slusallek, R. Leißa, S. Hack, J. Teich, F. Hannig

AnyHLS: High-Level Synthesis with Partial Evaluation

Publication Type: Article

IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems (TCAD) (Proceedings of CODES+ISSS 2020), 39(11): 3202-3214, 2020

@article{oezkan2020anyhls,

author = {Özkan, M. Akif and Pérard-Gayot, Arsène and Membarth, Richard and Slusallek, Philipp and Leißa, Roland and Hack, Sebastian and Teich, Jürgen and Hannig, Frank},

title = {{AnyHLS}: High-Level Synthesis with Partial Evaluation},

journal = {IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems (TCAD) (Proceedings of CODES+ISSS 2020)},

pages = {3202--3214},

volume = {39},

number = {11},

year = 2020,

month = sep,

date = {2020-09-20/2020-09-25},

doi = {10.1109/TCAD.2020.3012172},

publisher = {IEEE}

}

2019

A. Pérard-Gayot, R. Membarth, R. Leißa, S. Hack, P. Slusallek

Rodent: Generating Renderers without Writing a Generator

Publication Type: Article

ACM Transactions on Graphics (TOG) (Proceedings of SIGGRAPH 2019), 38(4): 40:1-40:12, 2019

@article{perard2019rodent,

author = {Pérard-Gayot, Arsène and Membarth, Richard and Leißa, Roland and Hack, Sebastian and Slusallek, Philipp},

title = {Rodent: Generating Renderers without Writing a Generator},

journal = {ACM Transactions on Graphics (TOG) (Proceedings of SIGGRAPH 2019)},

pages = {40:1--40:12},

volume = {38},

number = {4},

year = 2019,

month = jul,

date = {2019-07-28/2019-08-01},

doi = {10.1145/3306346.3322955},

publisher = {ACM}

}

2018

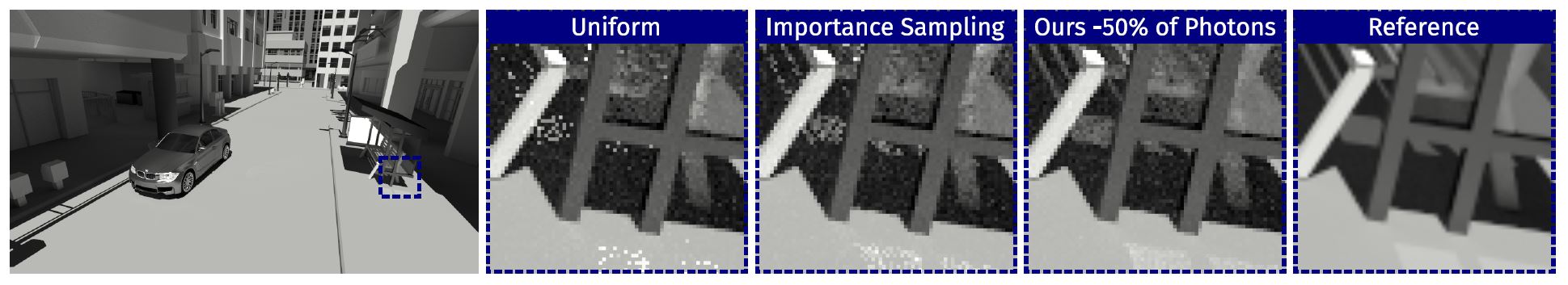

P. Grittmann, A. Pérard-Gayot, P. Slusallek, J. Křivánek

Efficient Caustic Rendering with Lightweight Photon Mapping

Publication Type: Article

Computer Graphics Forum (Proceedings of the 29th Eurographics Symposium on Rendering), 37(4): 133-142, 2018

@article {Grittmann:2018:ECR,

author = {Pascal Grittmann and Ars{\`{e}}ne P{\'{e}}rard-Gayot and Philipp Slusallek and Jaroslav K{\v{r}}iv{\'a}nek},

title = {Efficient Caustic Rendering with Lightweight Photon Mapping},

journal = {Computer Graphics Forum},

note = {EGSR~'18},

volume = {37},

number = {4},

issn = {1467-8659},

year = {2018},

}

R. Leißa, K. Boesche, S. Hack, A. Pérard-Gayot, R. Membarth, P. Slusallek, A. Müller, B. Schmidt

AnyDSL: A Partial Evaluation Framework for Programming High-Performance Libraries

Publication Type: Article, HiPEAC 2018 Paper Award

Proceedings of the ACM on Programming Languages (PACMPL), 2(OOPSLA): 119:1-119:30, 2018

@article{leissa2018anydsl,

author = {Leißa, Roland and Boesche, Klaas and Hack, Sebastian and Pérard-Gayot, Arsène and Membarth, Richard and Slusallek, Philipp and Müller, André and Schmidt, Bertil},

title = {{AnyDSL}: A Partial Evaluation Framework for Programming High-Performance Libraries},

journal = {Proceedings of the ACM on Programming Languages (PACMPL)},

pages = {119:1--119:30},

volume = {2},

number = {OOPSLA},

%year = 2018,

%month = nov,

date = {2018-11-04/2018-11-09},

note = {{HiPEAC 2018 Paper Award}},

doi = {10.1145/3276489},

publisher = {ACM}

}

M. A. Özkan, A. Pérard-Gayot, R. Membarth, P. Slusallek, J. Teich, F. Hannig

A Journey into DSL Design using Generative Programming: FPGA Mapping of Image Border Handling through Refinement

Publication Type: Conference

Proceedings of the Fifth International Workshop on FPGAs for Software Programmers (FSP), pp. 1-9, Dublin, Ireland, August 31, 2018

@inproceedings{oezkan2018fpgaborderhandling,

author = {Özkan, Mehmet Akif and Pérard-Gayot, Arsène and Membarth, Richard and Slusallek, Philipp and Teich, Jürgen and Hannig, Frank},

address = {Dublin, Ireland},

booktitle = {Proceedings of the Fifth International Workshop on FPGAs for Software Programmers (FSP)},

title = {{A Journey into DSL Design using Generative Programming: FPGA Mapping of Image Border Handling through Refinement}},

pages = {1--9},

year = 2018,

month = aug,

date = {2018-08-31},

organization = {VDE}

}

A. Pérard-Gayot, R. Membarth, P. Slusallek, S. Moll, R. Leißa, S. Hack

A Data Layout Transformation for Vectorizing Compilers

Publication Type: Conference

Proceedings of the 2018 Workshop on Programming Models for SIMD/Vector Processing (WPMVP), pp. 7:1-7:8, Vösendorf / Vienna, Austria, February 24, 2018

@inproceedings{perard2018splitalloca,

author = {Pérard-Gayot, Arsène and Membarth, Richard and Slusallek, Philipp and Moll, Simon and Leißa, Roland and Hack, Sebastian},

address = {Vösendorf / Vienna, Austria},

booktitle = {Proceedings of the 2018 Workshop on Programming Models for SIMD/Vector Processing (WPMVP)},

title = {{A Data Layout Transformation for Vectorizing Compilers}},

pages = {7:1--7:8},

year = 2018,

month = feb,

date = {2018-02-24},

doi = {10.1145/3178433.3178440},

organization = {ACM}

}

2017

A. Pérard-Gayot, J. Kalojanov, P. Slusallek

GPU Ray-tracing using Irregular Grids

Publication Type: Article

Computer Graphics Forum, 36(2): 477-486, 2017

A. Pérard-Gayot, M. Weier, R. Membarth, P. Slusallek, R. Leißa, S. Hack

RaTrace: Simple and Efficient Abstractions for BVH Ray Traversal Algorithms

Publication Type: Conference

Proceedings of the 16th ACM SIGPLAN International Conference on Generative Programming: Concepts & Experiences (GPCE), pp. 157-168, Vancouver, BC, Canada, October 23-24, 2017

@inproceedings{perard2017ratrace,

author = {Pérard-Gayot, Arsène and Weier, Martin and Membarth, Richard and Slusallek, Philipp and Leißa, Roland and Hack, Sebastian},

address = {Vancouver, BC, Canada},

booktitle = {Proceedings of the 16th International Conference on Generative Programming: Concepts \& Experiences (GPCE)},

title = {{RaTrace: Simple and Efficient Abstractions for BVH Ray Traversal Algorithms}},

pages = {157--168},

year = 2017,

month = oct,

date = {2017-10-23/2017-10-24},

doi = {10.1145/3136040.3136044},

organization = {ACM}

}

2016

M. Weier, T. Roth, E. Kruijff, A. Hinkenjann, A. Pérard-Gayot, P. Slusallek, Y. Li

Foveated Real-Time Ray Tracing for Head-Mounted Displays

Publication Type: Conference

Proceedings of the 24th Pacific Conference on Computer Graphics and Applications (PG '16), pp. 289-298, Okinawa, Japan, October 11-14, 2016
