@inproceedings{Yazici2025,
  booktitle = {Eurographics Symposium on Rendering},
  editor = {Wang, Beibei and Wilkie, Alexander},
  title = {{Less can be more: A Footprint-driven Heuristic to skip Wasted Connections and Merges in Bidirectional Rendering}},
  author = {Yazici, \"{O}mercan and Grittmann, Pascal and Slusallek, Philipp},
  year = {2025},
  publisher = {The Eurographics Association},
  ISSN = {1727-3463},
  ISBN = {978-3-03868-292-9},
  DOI = {10.2312/sr.20251180}
}

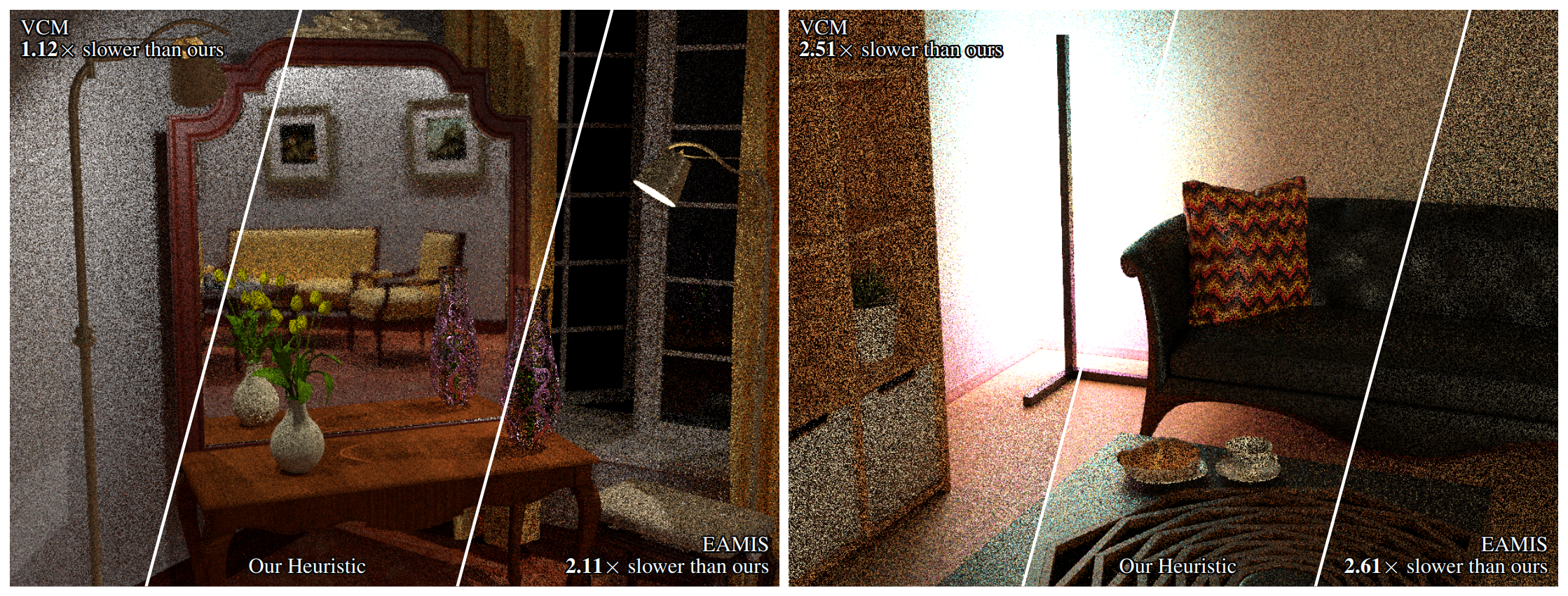

Bidirectional rendering algorithms can robustly render a wide range of scenes and light transport effects. This robustness comes from combining a huge number of sampling techniques: paths traced from the camera are combined with paths traced from the lights by connecting or merging their vertices in all possible combinations. The flip side is that efficiency suffers, because most of these connections and merges are not useful: their samples receive a weight close to zero. Skipping these wasted computations is hence desirable. Prior work has attempted this via manual parameter tuning, by classifying materials as “specular”, “glossy”, or “diffuse”, or via costly data-driven adaptation. We instead propose a simple footprint-driven heuristic that selectively enables only the most impactful bidirectional techniques. Our heuristic relies only on readily available PDF values, requires neither manual tuning nor precomputation, and supports arbitrarily complex material systems.
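
To illustrate why many connections and merges contribute almost nothing, the sketch below computes weights with the standard balance heuristic, assuming the weights mentioned above are multiple importance sampling weights. It is not the footprint-driven heuristic proposed in the paper, which the abstract does not spell out. The function name, the PDF values, and the `1e-3` cutoff are hypothetical and chosen only for illustration.

```python
def balance_heuristic_weights(pdfs):
    """Balance-heuristic weights: w_i = p_i / sum_j p_j."""
    total = sum(pdfs)
    if total == 0.0:
        # No technique can sample this path; all weights are zero.
        return [0.0 for _ in pdfs]
    return [p / total for p in pdfs]


# Hypothetical PDF values of three techniques that could all
# generate the same path (illustrative numbers, not from the paper).
pdfs = [50.0, 0.01, 0.002]
weights = balance_heuristic_weights(pdfs)

# Techniques whose weight falls below an illustrative cutoff
# contribute almost nothing to the combined estimate.
negligible = [i for i, w in enumerate(weights) if w < 1e-3]

print(weights)
print(negligible)
```

In this made-up configuration the first technique receives nearly all of the weight, so the work spent evaluating the other two is largely wasted. This is the kind of computation the abstract argues should be skipped.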