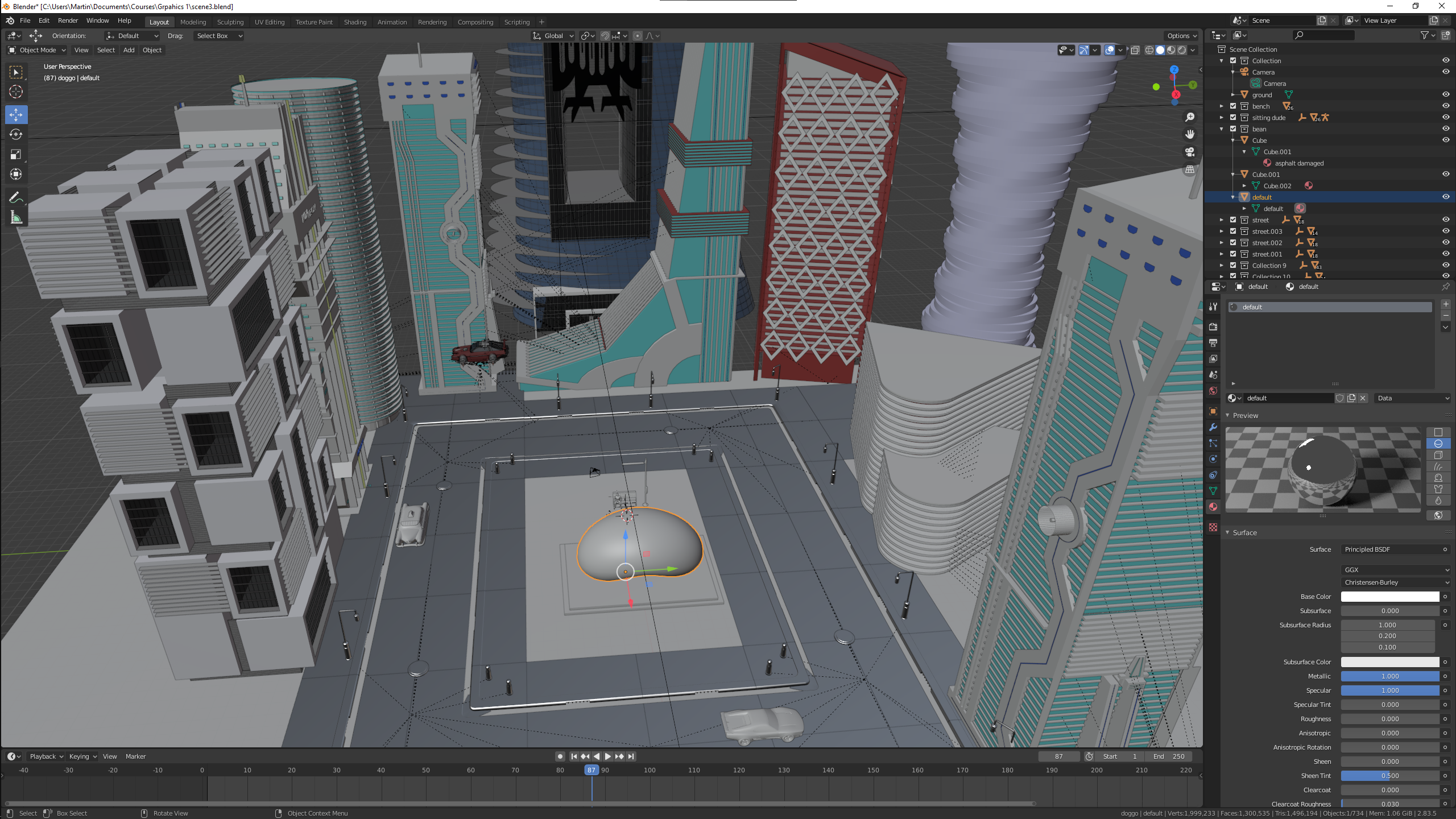

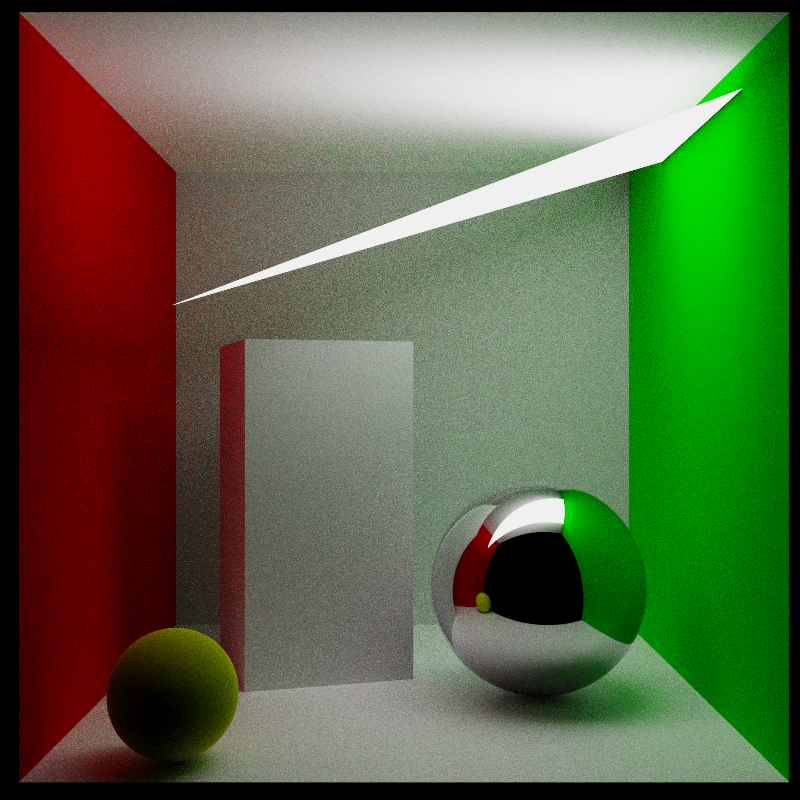

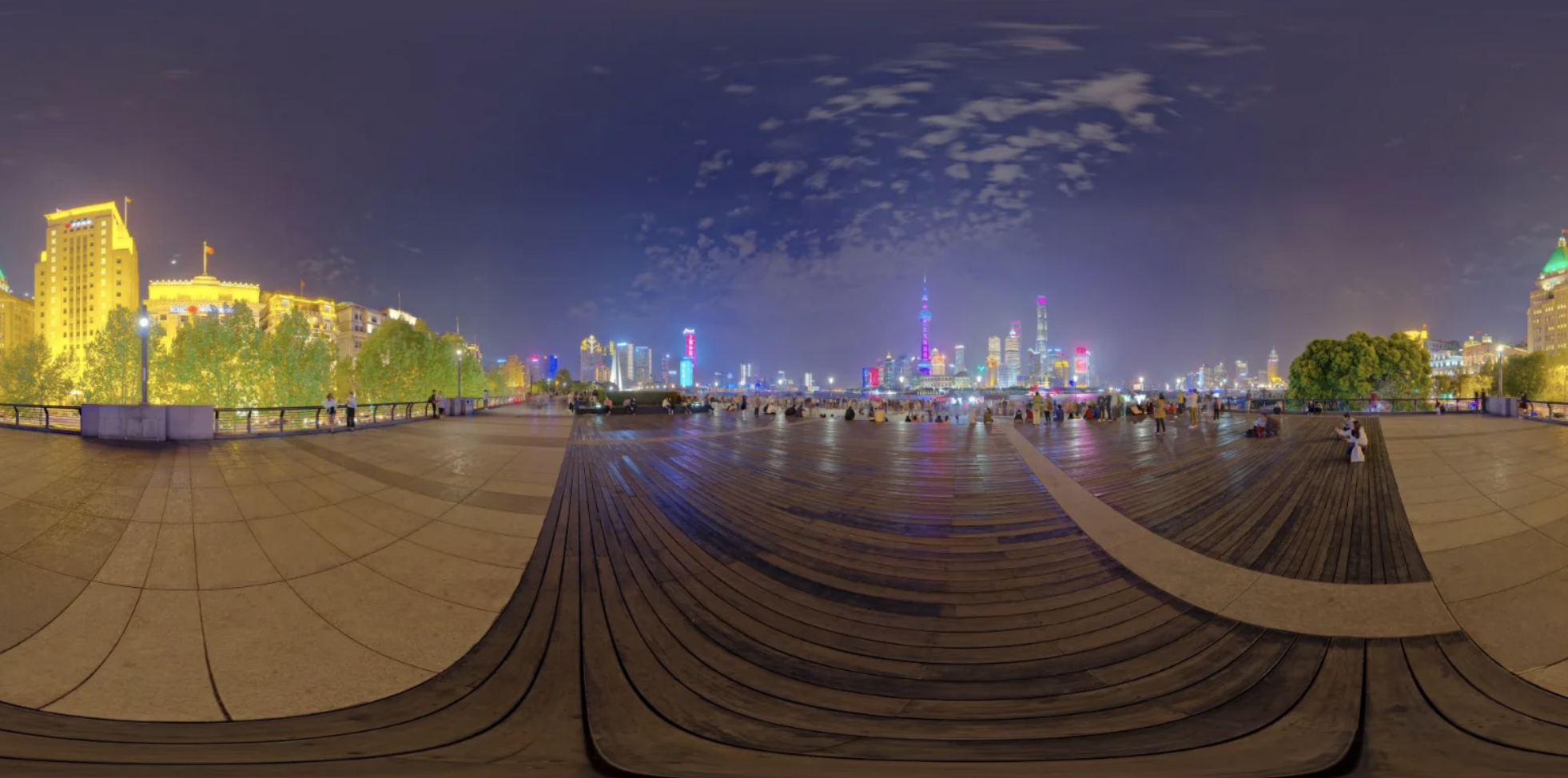

Concept

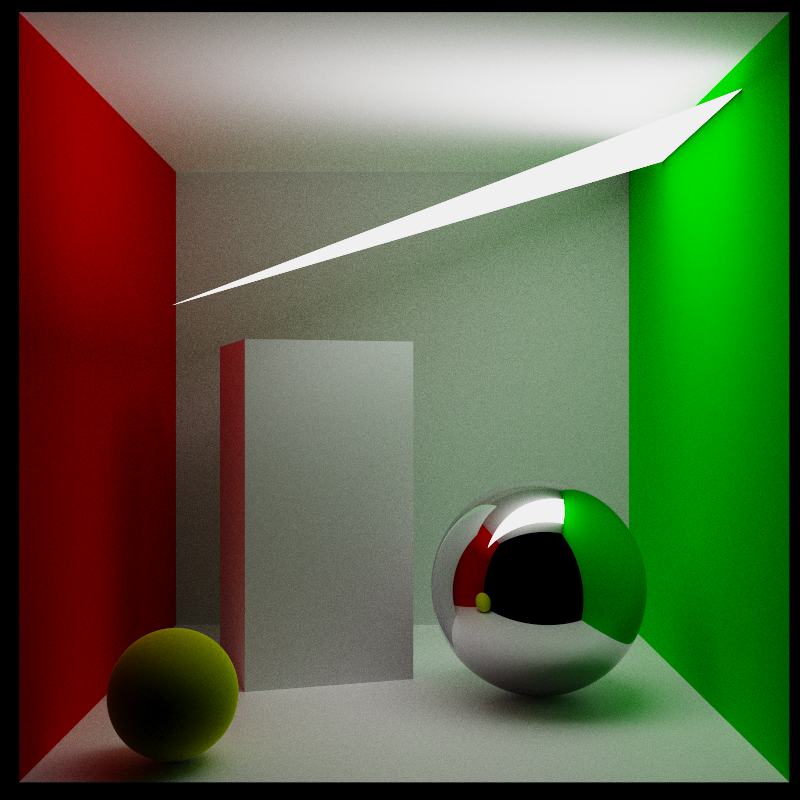

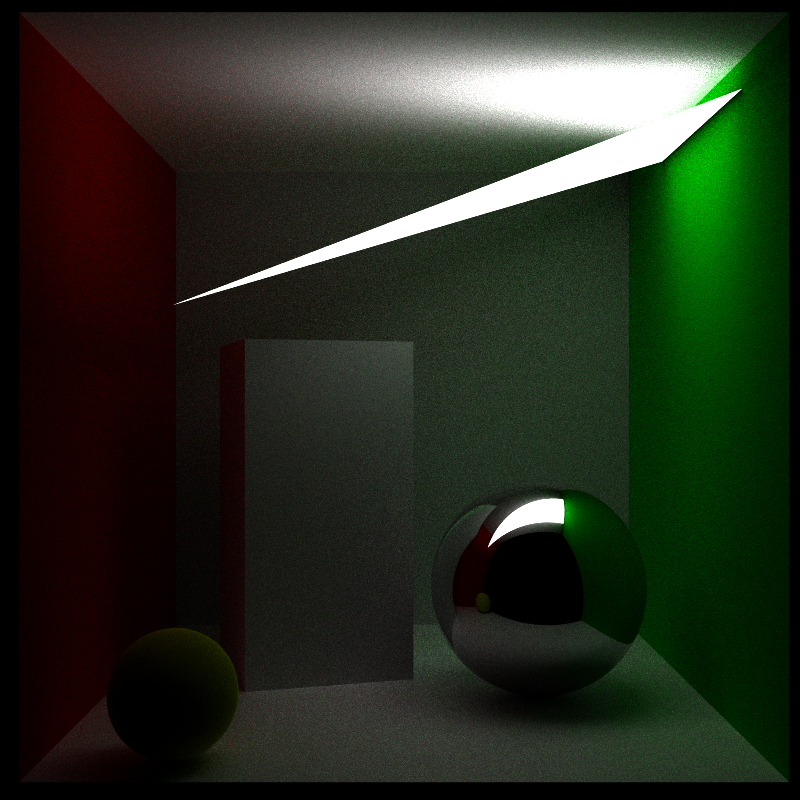

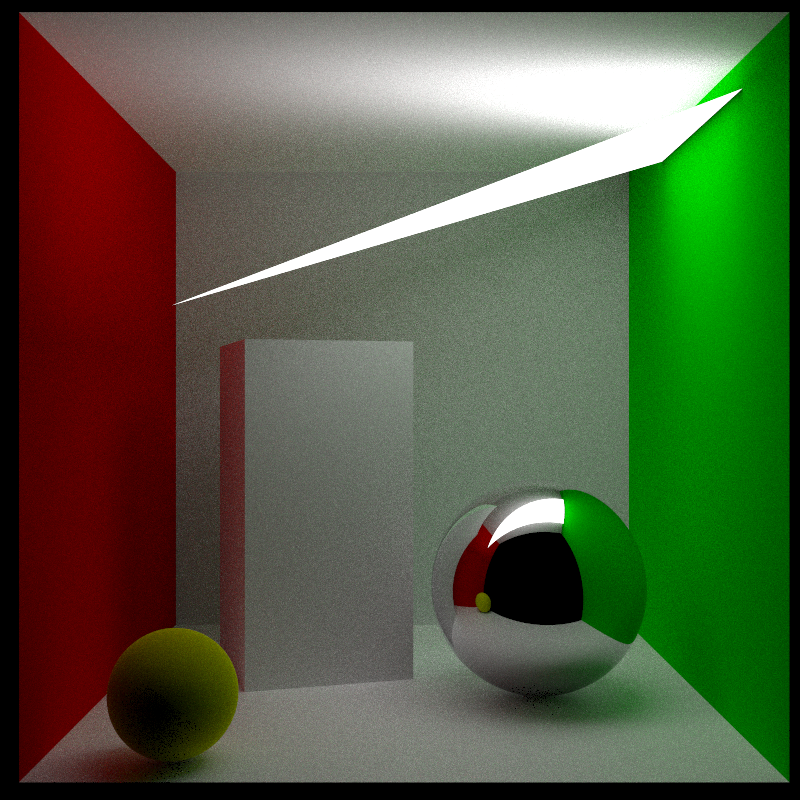

Titled 'Ghost in the Omphalos', our scene is inspired by the cyberpunk anime 'Ghost in the Shell', where 'ghost' refers to the essence of oneself. 'Omphalos' is the Greek word for 'navel', and is the name given to the concave underside of Cloud Gate ("The Bean"), a public sculpture by the artist Sir Anish Kapoor. The Bean, with its encapsulated reality, takes center stage in our rendered scene, with a human in its simplest form staring at it from the outside. Although The Bean seems close, the human presence is insignificant in its reflection, towered over by skyscrapers and made emptier still by the gleaming lights at night. Our work examines the ambiguities of a distorted reality and provokes thoughts on humanness and immaterial materiality in a futuristic setting.

We used OpenMP's dynamic multithreading to spread the rendering load over the ten cores of an Intel 10900X CPU. We rendered the 1440p image at an average of 1200 samples per pixel, which took 8 hours. Our scene used 1.3 million triangles.
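As a rough illustration of the threading setup, here is a minimal sketch of a row-parallel render loop. It assumes that "dynamic multithreading" means OpenMP's `schedule(dynamic)` clause, where idle threads grab the next unclaimed row so that expensive regions (such as the reflective Bean) do not stall a single core. The names `shade_pixel` and `render`, the one-row chunk size, and the pseudo-random stand-in for path tracing are all hypothetical and are not taken from our renderer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for tracing one pixel: averages `spp` pseudo-random
// samples in [0, 1). A real renderer would trace rays against the scene here.
static float shade_pixel(int x, int y, int spp) {
    std::uint32_t state = static_cast<std::uint32_t>(y) * 9781u +
                          static_cast<std::uint32_t>(x) * 6271u + 1u;
    float sum = 0.0f;
    for (int s = 0; s < spp; ++s) {
        state = state * 1664525u + 1013904223u;                // LCG step
        sum += static_cast<float>(state >> 8) / 16777216.0f;   // map to [0, 1)
    }
    return sum / static_cast<float>(spp);
}

// Renders a width x height grayscale image. Rows are handed out to threads
// one at a time (assumed chunk size of 1), so a thread that finishes a cheap
// row immediately picks up another instead of waiting on its neighbours.
std::vector<float> render(int width, int height, int spp) {
    assert(width > 0 && height > 0 && spp > 0);
    std::vector<float> image(static_cast<std::size_t>(width) * height);

    #pragma omp parallel for schedule(dynamic, 1)
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // Each pixel is written by exactly one thread, so no locking is needed.
            image[static_cast<std::size_t>(y) * width + x] = shade_pixel(x, y, spp);
        }
    }
    return image;
}
```

Built with an OpenMP-enabled compiler (for example `-fopenmp` on GCC or Clang), a call such as `render(2560, 1440, 1200)` would distribute rows across all available cores; without the flag the pragma is ignored and the loop runs serially with the same result.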